Evidence of uneven quality in the US health care system is well established. Everyone knows the greatest hits. The Dartmouth Atlas of Health Care (1995) documented wide geographical disparities in utilization and spending, suggesting overuse and fundamental flaws in clinical decision making. McGlynn et al. (NEJM, 2003) described major quality deficits in primary care and the management of chronic illness. With regard to hospital-based care, the Institute of Medicine (1999) estimated that as many as 98,000 Americans die annually as a result of medical errors. Wide variation in surgical mortality rates across hospitals and physicians suggests that preventable harm in hospitals is far greater than that.

The search for strategies to improve health care quality on a national scale goes on. To date, strategies imposed “top down” on the delivery system have had limited effectiveness. Public reporting, though steadily increasing over time, has so far had little impact on how patients choose their hospitals and physicians. Early generations of pay for performance plans from commercial payers and CMS were successful in increasing compliance with targeted practices and standards, but their impact on patient outcomes remains unproven. Newer models of value-based reimbursement have much stronger financial incentives, but it is doubtful that incentives alone will be successful in catalyzing new and improved models of care.

“Bottom up” initiatives originating within health systems reflect the truism that all quality improvement is local. But improving care one hospital or one physician practice at a time is slow and uneven. It’s also hard to learn from yourself, without benchmarks or new ideas from the outside.

In this blog, I’ll lay out a middle ground, whereby hospitals and physicians from different systems share data and collaborate to improve care.
I’ll describe my experience at Dartmouth and Michigan, and lessons learned in applying collaborative quality improvement at the regional and state levels. I’ll then consider opportunities and challenges in scaling this model up to the national level, based on my current work with Sound Physicians.

Developing the blueprint

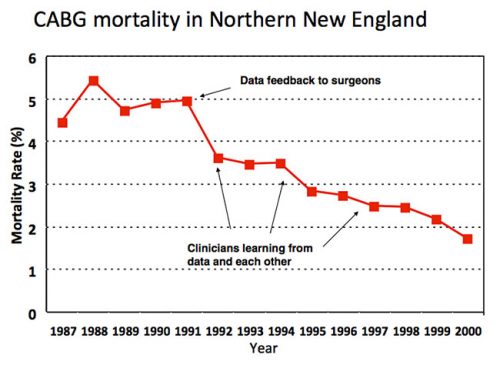

When I arrived in Hanover, NH for my surgical internship in 1989, the chief of cardiac surgery at Dartmouth Hitchcock Medical Center, Dr. Stephen Plume, was apoplectic. The Health Care Financing Administration (now the Centers for Medicare & Medicaid Services) had just publicly released hospital mortality rates based on national Medicare data, and DHMC was ranked near the very bottom. Plume was convinced that the rankings reflected bad data: that noisy claims data and subpar risk adjustment had failed to account for the fact that DHMC was operating on higher risk patients than its peers.

The Northern New England Cardiovascular Disease Study Group was founded to set the record straight. Led by Plume and Dartmouth epidemiology professor Gerry O’Connor, cardiac surgeons and cardiologists from five hospitals in Maine, New Hampshire and Vermont began to systematically collect data about their patients, practices, and outcomes. The data were externally audited (to ward off any suspicions about cheating) and clinically detailed enough to allow for rigorous case mix adjustment.

Regrettably, at least for Plume, the NNE group’s analysis of its first 3,000 CABG patients indicated that HCFA’s previous analysis was basically correct: Dartmouth really did have surgical mortality rates two to three times higher than its regional competitors. With Dartmouth cardiologists threatening to start referring patients elsewhere, Plume needed to address concerns about his own program. The NNE group quickly pivoted and refocused its goals around improving care across the region, rather than fighting HCFA.

By then a health services research fellow with the NNE group, I had a front row seat to its improvement work. Step one was performance feedback, giving every hospital and surgeon detailed information (still confidential) about his outcomes against his peers’ (all the surgeons were men at that time). Almost immediately, region-wide mortality rates with CABG fell by nearly one-third, from 5.0% to 3.5%.
Because this decline preceded any measurable change in practice, I asked my mentor whether this was simply the Hawthorne effect. Retorted Professor O’Connor: “John, I worship at the altar of Hawthorne.”

Step two involved surgeons and their teams learning from each other. Every hospital sent a delegation for a 1-day site visit to every other hospital. The surgeons spent most of their time watching their colleagues operate; the cardiac nurses were mostly in the intensive care units and wards. On almost every visit, the teams picked up a handful of “good ideas” and “tricks” for redesigning care.

And third, the NNE group started learning from its data. Leveraging a very large, detailed clinical registry, epidemiologists and statisticians began to explore relationships between specific processes and outcomes, addressing fundamental clinical questions: When should I stop heparin anticoagulation and beta blockers before surgery? In which patients is an internal mammary graft imperative? Intraoperatively, which drugs and regimens for myocardial protection work best? How fast can I safely extubate patients after surgery? Based on systematic empirical analyses, the group began to delineate optimal practices and implement them uniformly across the region.

The end results of the NNE group were remarkable. Variation in mortality rates (originally 3-fold and 5-fold across hospitals and surgeons, respectively) fell dramatically. More importantly, region-wide mortality had plummeted to less than 2% by 2000. Of course, the safety of cardiac surgery improved steadily during the 1990s across the United States. But according to a national study by Peterson et al. (JACC, 2000), NNE topped the charts in terms of both overall mortality and rates of improvement.

As I reflect on the early success of the NNE group, I recognize some of its early advantages. It focused on two specialties (cardiac surgery and interventional cardiology) with readily measured processes and outcomes, like mortality.
It also had geography in its favor. The five hospitals in northern New England were close enough to allow for regular, regional meetings and the fostering of friendships, collaborative relationships, and trust. At the same time, the hospitals were at least 60 miles apart and, for the most part, separated by mountains! Thus, collaboration was not easily co-opted by competition. Many doubted whether this experiment could be scaled.

10x collaborative quality improvement

In 2003, Dr. Nancy O’Connor Birkmeyer, a clinical epidemiologist in the mold of her father (yes, same O’Connor), and I moved to the University of Michigan and met Dr. David Share, a senior executive responsible for quality at Blue Cross Blue Shield of Michigan. BCBSM had been disappointed by the early results of its Pay for Performance (P4P) programs. Aside from aiming to accelerate quality improvement and reduce costs, Share aspired to a value-based reimbursement model that better engaged Michigan providers and connected them less confrontationally to the largest commercial insurer in the state.

Several years earlier, having learned about the work of the NNE group, Share authorized a Michigan-wide collaborative improvement program focused on interventional cardiology. This pilot was built on the same cornerstones: a rigorous clinical registry, confidential performance feedback, and quarterly meetings involving all participating hospitals, cardiologists, and staff. BCBSM funded the effort, including the coordinating center and reimbursements to hospitals for clinical data abstraction and participation in improvement work (about $2 million per year in total). It was careful to remain a “neutral convener” and was not privy to any provider-specific performance data.

Buoyed by strong clinical results of the cardiology pilot and enthusiasm from participants, BCBSM decided to go all in in 2005 with the Michigan Value Partnerships program. It began to add new programs targeting the biggest and most costly clinical specialties, spanning medical and surgical care in both inpatient and ambulatory settings. There are currently over 15 collaborative improvement programs.

As reported in Health Affairs (Share et al., 2011), BCBSM’s investment in regional collaborative improvement was paying off with better outcomes for patients and, in many cases, lower costs.
For clinical specialties with national registries and thus reliable benchmarks for patient outcomes (cardiac, general and bariatric surgery), Michigan as a state moved from about average to the top 10 percent of hospitals nationwide.

Over recent years, BCBSM has tweaked the model from purely “pay for participation” to one whereby hospitals and physicians are rewarded for their results, in terms of both excellence and improvement. (Total incentive payments exceeded $100 million per year across the state.) With the Michigan Value Collaborative (the program I founded and ran until 2014), BCBSM also began focusing more intently on reducing costs around episodes of hospitalization, including post-acute care.

Based on my own observations, the Michigan Value Partnerships programs were able to replicate the success of the NNE group, despite considerably more programmatic breadth and complexity. While encompassing 10 times as many hospitals and providers from a much more competitive environment, most of the programs succeeded in developing robust clinical registries and enough social capital with physicians to foster collaborative learning and real practice change. Some of the programs did some remarkably difficult things. The bariatric surgeons, for example, submitted videotapes of themselves operating and allowed their peers to rate their technical skill (NEJM, 2013)! Every bariatric surgeon in the state is now participating in a peer-based coaching program.

Admittedly, the Michigan programs were only possible because BCBSM stepped in as a catalyst, convener, and funder. Its willingness and ability to do so were no doubt influenced by its status as the dominant (70% market share) private insurer in the state. Thus, BCBSM had the confidence that the benefits from its investment would accrue disproportionately to its own beneficiaries. Whether this model can be scaled up to a national level and applied in more diverse practice settings has yet to be established.

Is 100x really feasible?

Three months ago I joined Sound Physicians as its Chief Clinical Officer. Sound is a physician-led organization specializing in managing quality and cost around acute episodes of care. It comprises over 2,500 hospitalists, intensivists, and emergency medicine physicians with practices in approximately 225 hospitals nationwide.

As with any health delivery system, providing high-value health care is central to Sound’s vision and mission. It is also core to its business success. Differentiating itself from competitors on quality, patient experience, throughput and cost is central to its ability to attract and retain hospital partners as clients. Continuous improvement is also essential to Sound’s success with value-based reimbursement models, including CMS’ Merit-based Incentive Payment System (MIPS) for professional payments and risk-based episode payment models.

Sound has several advantages that would facilitate data-driven, collaborative improvement on a national scale. To fuel performance feedback to clinicians, it has a well-established data infrastructure that integrates clinical data from point of care, hospital administrative data, and, in most instances, Medicare claims data around hospital episodes. It has an operating model and its own IT platform that support standardized clinical workflows, readily configurable to disease-specific pathways and best practices. And finally, Sound has a strong culture of developing physician leaders and a willingness to experiment with innovative care models.

At the same time, I’m anticipating several challenges in achieving data-driven, collaborative improvement. Improvement implies care redesign and changes in how physicians practice. The latter generally requires leadership, good data and lots of persuasion. At the scale of NNE and Michigan, one charismatic improvement leader could establish relationships with individual clinicians and appeal to them directly.
Because Sound’s practices are so dispersed nationally, such relationships are not practical. Another barrier is workforce shortages in acute care medicine, particularly hospital medicine. Many hospitals experience physician turnover rates exceeding 25% and rely heavily on temporary labor. Establishing a culture of continuous learning and improvement will always be challenging in that environment.

Other organizations with aspirations to accelerate improvement on a national scale will face these and other challenges. Such groups include not only national physician organizations and hospital systems, but also professional societies aiming to translate specialty-specific clinical registries into meaningful improvement. Overcoming those barriers will require creative solutions for developing physician leaders with the right skills, virtual models for engaging with and motivating frontline clinicians, and, in most instances, aligned compensation incentives. I look forward to sharing observations and lessons learned in each of these areas in future blogs.